How Does Keras Train Lstm Without Doing Same Data Again Every Epoch

Epoch vs Batch Size vs Iterations

Know your code…

You must have had those times when you were looking at the screen, scratching your head and wondering, "Why am I typing these three terms in my code, and what is the difference between them?" because they all look so similar.

To find out the difference between these terms, you need to know some machine learning terms like Gradient Descent to help you understand better.

Here is a short summary of Gradient Descent…

Gradient Descent

It is an iterative optimization algorithm used in machine learning to find the best results (minima of a curve).

Gradient means the rate of inclination or declination of a slope.

Descent means the instance of descending.

Saying the algorithm is iterative means that we need to compute the result multiple times to reach the most optimal result. The iterative quality of gradient descent helps an under-fitted graph fit the data optimally.

Gradient Descent has a parameter called the learning rate. As you can see in the figure (left), initially the steps are bigger, which means the learning rate is higher, and as the point goes down the learning rate becomes smaller, shown by the shorter steps. (With a fixed learning rate the steps also shrink near the minimum, because the gradient itself gets smaller.) Also, the Cost Function is decreasing, or the cost is decreasing. Sometimes you might see people saying that the Loss Function is decreasing or the loss is decreasing; both Cost and Loss represent the same thing (btw it is a good thing that our loss/cost is decreasing).
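To make the idea concrete, here is a minimal sketch of gradient descent on a one-dimensional curve. The cost function `(x - 3)^2`, the starting point, and the learning rate of 0.1 are assumptions chosen for illustration; they are not from the original article.

```python
def gradient_descent(grad, x0, learning_rate=0.1, steps=50):
    """Repeatedly step against the gradient, recording each position."""
    x = x0
    history = [x]
    for _ in range(steps):
        x = x - learning_rate * grad(x)  # move downhill by one scaled step
        history.append(x)
    return history


# Hypothetical cost: f(x) = (x - 3)^2, whose minimum is at x = 3.
def cost(x):
    return (x - 3.0) ** 2


def cost_gradient(x):
    return 2.0 * (x - 3.0)


path = gradient_descent(cost_gradient, x0=0.0)
# Distance covered by each step; these shrink as the point nears the minimum.
step_sizes = [abs(b - a) for a, b in zip(path, path[1:])]

print("final x:", path[-1])
print("first step:", step_sizes[0], "last step:", step_sizes[-1])
```

Even though the learning rate stays fixed here, the early steps are large and the late steps are tiny, and the cost goes down along the way.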

We need terminologies like epochs, batch size, and iterations only when the data is too big, which happens all the time in machine learning, and we can't pass all the data to the computer at once. So, to overcome this problem, we need to divide the data into smaller sizes, give it to our computer one by one, and update the weights of the neural network at the end of every step to fit it to the data given.
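Dividing the data into smaller pieces can be sketched in a few lines. The helper name `iterate_batches` and the ten-item dataset are hypothetical, used only to show the slicing; in real training, the weight update would happen inside the loop, once per batch.

```python
def iterate_batches(examples, batch_size):
    """Yield consecutive slices of `examples`, each at most `batch_size` long."""
    for start in range(0, len(examples), batch_size):
        yield examples[start:start + batch_size]


# Hypothetical dataset: 10 numbered examples, fed 4 at a time.
dataset = list(range(10))
batches = list(iterate_batches(dataset, batch_size=4))

for batch in batches:
    # A real training loop would update the weights here.
    print(len(batch), batch)
```

Note that the last batch is smaller when the dataset size is not a multiple of the batch size.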

Epochs

One Epoch is when an ENTIRE dataset is passed forward and backward through the neural network only ONCE.

Since one epoch is too big to feed to the computer at once, we divide it into several smaller batches.

Why do we use more than one epoch?

I know it doesn't make sense at the start that passing the entire dataset through a neural network once is not enough, and that we need to pass the full dataset multiple times to the same neural network. But keep in mind that we are using a limited dataset, and to optimise the learning and the graph we are using Gradient Descent, which is an iterative process. So, updating the weights with a single pass, or one epoch, is not enough.

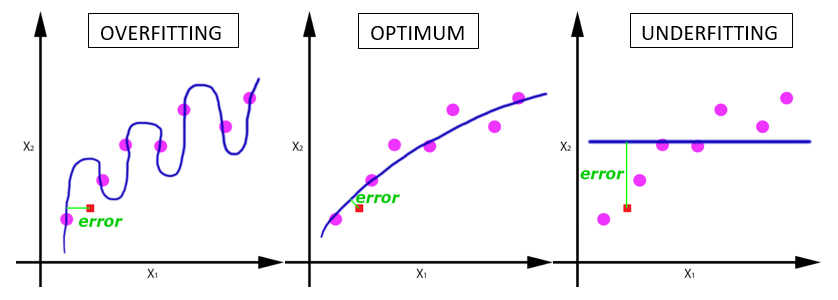

One epoch leads to underfitting of the curve in the graph.

As the number of epochs increases, the weights are changed more times in the neural network and the curve goes from underfitting to optimal to overfitting.
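The effect of running more epochs can be seen on a toy problem. The sketch below fits a single weight `w` in `y = w * x` with mini-batch gradient descent; the data, learning rate, and function name `train` are all assumptions made for illustration. It only shows the underfitting side of the story (the loss after one epoch versus many); this noise-free toy data cannot overfit.

```python
import random


def train(xs, ys, epochs, batch_size, learning_rate=0.05, seed=0):
    """Fit y = w * x with mini-batch gradient descent; return w and per-epoch loss."""
    rng = random.Random(seed)
    w = 0.0
    indices = list(range(len(xs)))
    losses = []
    for _ in range(epochs):
        rng.shuffle(indices)  # same data every epoch, visited in a new order
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            # Gradient of the mean squared error with respect to w on this batch.
            grad = sum(2.0 * (w * xs[i] - ys[i]) * xs[i] for i in batch) / len(batch)
            w -= learning_rate * grad  # one weight update per batch
        # Mean squared error over the full dataset after this epoch.
        losses.append(sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs))
    return w, losses


# Hypothetical data that follows y = 2x exactly.
xs = [0.1 * i for i in range(1, 21)]
ys = [2.0 * x for x in xs]

w_one, loss_one = train(xs, ys, epochs=1, batch_size=5)
w_many, loss_many = train(xs, ys, epochs=20, batch_size=5)

print("after 1 epoch:   w =", w_one, "loss =", loss_one[-1])
print("after 20 epochs: w =", w_many, "loss =", loss_many[-1])
```

After a single epoch the weight is still far from 2, while after 20 passes over the same 20 examples it is very close.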

So, what is the right number of epochs?

Unfortunately, there is no right answer to this question. The answer is different for different datasets, but you can say that the number of epochs is related to how diverse your data is. Just an example: do you have only black cats in your dataset, or is it a much more diverse dataset?

Batch Size

Total number of training examples present in a single batch.

Note: Batch size and number of batches are two different things.

But what is a batch?

As I said, you can't pass the entire dataset into the neural net at once. So, you divide the dataset into a number of batches, or sets, or parts.

Just like you divide a big article into multiple sets/batches/parts like Introduction, Gradient Descent, Epoch, Batch Size and Iterations, which makes it easy for the reader to read the entire article and understand it. 😄

Iterations

To get the iterations you just need to know multiplication tables or have a calculator. 😃

Iterations is the number of batches needed to complete one epoch.

Note: The number of batches is equal to the number of iterations for one epoch.

Let's say we have 2000 training examples that we are going to use.

We can divide the dataset of 2000 examples into batches of 500; then it will take 4 iterations to complete 1 epoch.

Where Batch Size is 500 and Iterations is 4, for 1 complete epoch.
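The same arithmetic as a small sketch. The function name `iterations_per_epoch` and the 10-epoch run are hypothetical; rounding up covers the case where the batch size does not divide the dataset evenly, which assumes the final, smaller batch is still used.

```python
import math


def iterations_per_epoch(num_examples, batch_size):
    """Number of batches (weight updates) needed to see every example once."""
    return math.ceil(num_examples / batch_size)


print(iterations_per_epoch(2000, 500))  # 4, the example from the text
print(iterations_per_epoch(2000, 300))  # 7, the last batch holds only 200 examples

# Hypothetical run of 10 epochs: total weight updates over the whole training.
epochs = 10
total_iterations = epochs * iterations_per_epoch(2000, 500)
print(total_iterations)  # 40
```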

Source: https://towardsdatascience.com/epoch-vs-iterations-vs-batch-size-4dfb9c7ce9c9
